Hadoop Servers: Navigating Big Data Hosting

Big data has transformed the way businesses operate, allowing them to make informed decisions based on vast amounts of data. However, managing and analyzing big data can be challenging, especially for programmers who are not familiar with the technology. In this article, we will explore how Hadoop servers can help programmers navigate the world of big data hosting.

What are Hadoop Servers?

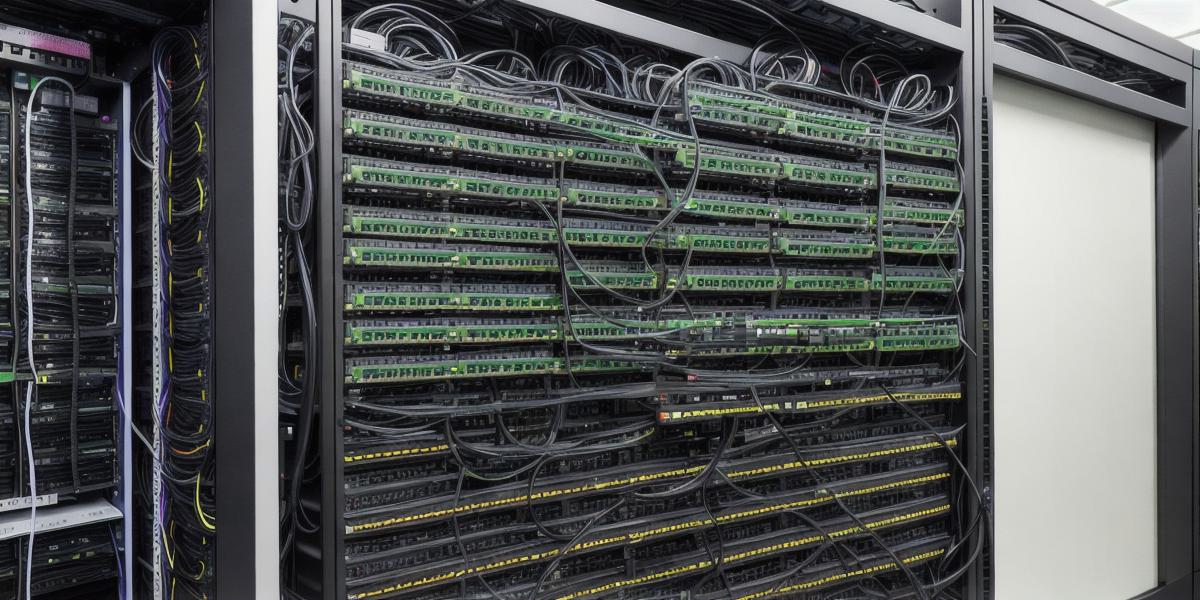

Hadoop servers form a cluster of computers that work together to store, process, and analyze large amounts of data. Hadoop itself is an open-source software framework written in Java. Applications are most often written in Java, and other languages such as Python and C++ can be used through interfaces like Hadoop Streaming and Hadoop Pipes. The Hadoop cluster consists of two main kinds of machine: the Master Node and the Data Nodes.

The Master Node acts as the control center of the Hadoop cluster. It runs the NameNode process, which maintains the file system namespace (the directory tree) and the metadata that records which blocks make up each file and where those blocks are stored. In Hadoop 2 and later, task scheduling and resource allocation are handled by a separate service, the YARN ResourceManager, which commonly runs on a master machine as well. The Data Nodes run the DataNode process, which stores the data blocks and serves client read and write requests.
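The split between metadata and data can be illustrated with a small in-memory model. This is only a sketch under simplifying assumptions, not Hadoop's API: `ToyNameNode`, `ToyDataNode`, `write_file`, and `read_file` are hypothetical names, the block size is a few bytes rather than HDFS's default of 128 MB, and blocks are assigned round-robin without replication.

```python
BLOCK_SIZE = 4  # bytes; deliberately tiny for illustration


class ToyNameNode:
    """Holds metadata only: which blocks make up a file and where they live."""

    def __init__(self, datanode_names):
        self.datanode_names = list(datanode_names)
        self.namespace = {}  # path -> list of (block_id, datanode_name)

    def allocate(self, path, num_blocks):
        # Assumption: simple round-robin assignment of blocks to nodes.
        locations = [
            (f"{path}#blk{i}", self.datanode_names[i % len(self.datanode_names)])
            for i in range(num_blocks)
        ]
        self.namespace[path] = locations
        return locations

    def locate(self, path):
        return self.namespace[path]


class ToyDataNode:
    """Holds the actual block contents, keyed by block id."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}


def write_file(namenode, datanodes, path, data):
    # The client asks the NameNode where blocks go, then sends data to DataNodes.
    chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    locations = namenode.allocate(path, len(chunks))
    for (block_id, node_name), chunk in zip(locations, chunks):
        datanodes[node_name].blocks[block_id] = chunk


def read_file(namenode, datanodes, path):
    # The client looks up block locations, then fetches each block in order.
    return b"".join(
        datanodes[node_name].blocks[block_id]
        for block_id, node_name in namenode.locate(path)
    )


if __name__ == "__main__":
    nodes = {name: ToyDataNode(name) for name in ["dn1", "dn2"]}
    namenode = ToyNameNode(nodes)
    write_file(namenode, nodes, "/logs/a.txt", b"hello hadoop")
    print(namenode.locate("/logs/a.txt"))
    print(read_file(namenode, nodes, "/logs/a.txt"))
```

Note that the file contents never pass through `ToyNameNode`; it only answers the question of where each block lives, which mirrors the division of labor described above.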

Benefits of using Hadoop Servers

One of the primary benefits of using Hadoop servers is their ability to scale horizontally. This means that users can add more nodes to the cluster as needed, so storage and processing capacity grow along with the data. Additionally, Hadoop servers are fault-tolerant: the file system keeps several copies of each data block (three by default) on different nodes, so if one node fails, the data remains available from another copy and the affected work can be rerun elsewhere in the cluster.
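A short sketch can make both ideas concrete: how block copies on different nodes keep data readable after a failure, and how usable capacity grows as nodes are added. The function names below are hypothetical, and the round-robin placement is an assumption made for simplicity; real HDFS placement is rack-aware and more involved.

```python
def place_replicas(block_ids, node_names, replication=3):
    """Assign each block to `replication` distinct nodes (round-robin sketch)."""
    if replication > len(node_names):
        raise ValueError("need at least as many nodes as replicas")
    count = len(node_names)
    return {
        block: [node_names[(i + k) % count] for k in range(replication)]
        for i, block in enumerate(block_ids)
    }


def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one copy on a live node."""
    failed = set(failed_nodes)
    return {
        block
        for block, nodes in placement.items()
        if any(node not in failed for node in nodes)
    }


def usable_capacity_tb(node_count, tb_per_node, replication=3):
    """Raw capacity divided by the number of copies kept of each block."""
    return node_count * tb_per_node / replication


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]
    blocks = [f"b{i}" for i in range(5)]
    placement = place_replicas(blocks, nodes)

    # Losing one node leaves every block readable from another copy.
    print(sorted(readable_blocks(placement, ["dn2"])))

    # Adding nodes raises usable capacity linearly.
    print(usable_capacity_tb(10, 12), usable_capacity_tb(13, 12))
```

The capacity function also shows the cost of fault tolerance: with three copies of every block, only a third of the raw disk space holds unique data.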

Another benefit of using Hadoop servers is their ability to process unstructured and semi-structured data. This includes data from sources like social media, web logs, and sensors. Hadoop’s MapReduce programming model is well-suited to this type of processing: the programmer writes a map function and a reduce function, and the framework takes care of splitting the input, distributing the work across the cluster, and recovering from node failures, so large transformations can be expressed without writing distributed-systems code by hand.
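The map, shuffle, and reduce steps can be sketched in plain Python on a single machine. This is a minimal illustration of the programming model, not Hadoop's API: the function names are hypothetical, and a real job would run the map and reduce functions in parallel across the cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every token in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values collected for one key."""
    return key, sum(values)


def word_count(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    log_lines = ["GET /home", "GET /cart", "POST /cart"]
    print(word_count(log_lines))
```

Because each line is mapped independently and each key is reduced independently, both phases can be spread over many machines, which is what lets the model handle semi-structured input such as web logs at scale.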

Case Study: Netflix uses Hadoop Servers to process petabytes of customer viewing data

Netflix is a frequently cited example of how Hadoop servers can be used in practice. The streaming service is reported to process over 100 petabytes of customer viewing data each day, including information like user preferences, ratings, and search queries. Netflix runs this processing on a Hadoop cluster consisting of thousands of nodes, and the results feed the personalized recommendations it provides to its users.

FAQs

Q: What is the difference between a Master Node and a Data Node?

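A: The Master Node coordinates the cluster and runs the NameNode, which tracks the file system namespace and the location of every data block. The Data Nodes store the data blocks themselves and serve read and write requests for them.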

Q: How can I scale my Hadoop cluster?

A: Add more Data Nodes to the cluster. Storage and processing capacity grow with the number of nodes, so the cluster can keep pace with growing amounts of data.

Q: What is MapReduce?

A: MapReduce is the programming model Hadoop uses to process large datasets. A job consists of a map function that emits key-value pairs and a reduce function that combines the values for each key, and the framework distributes that work across the cluster.

Conclusion

Hadoop servers are a powerful tool for programmers navigating the world of big data hosting. With their ability to scale horizontally and process unstructured and semi-structured data, Hadoop servers can help businesses make informed decisions based on vast amounts of data. As we’ve seen in the Netflix case study, Hadoop servers are a practical solution for organizations looking to handle large volumes of data at scale.