Neural interfaces are coming to virtual reality headsets

Gabe Newell, the co-founder of Valve, imagines a future of gaming in which the brain supersedes the eyes, the hands and the ears. Built into virtual reality headsets, the first direct neural interfaces could arrive within a few years.

Tomorrow, we may no longer need our senses to play. The eyes, the hands and the ears will no longer be necessary to move through game worlds, because the computer or the console will be connected directly to the brain. That, at least, is the vision of Gabe Newell, the co-founder of Valve, the publisher of the famous game Half-Life. Interviewed by the New Zealand broadcaster 1 News, he spoke at length about how he sees video games evolving alongside direct neural interfaces, also called brain-computer interfaces (BCIs).

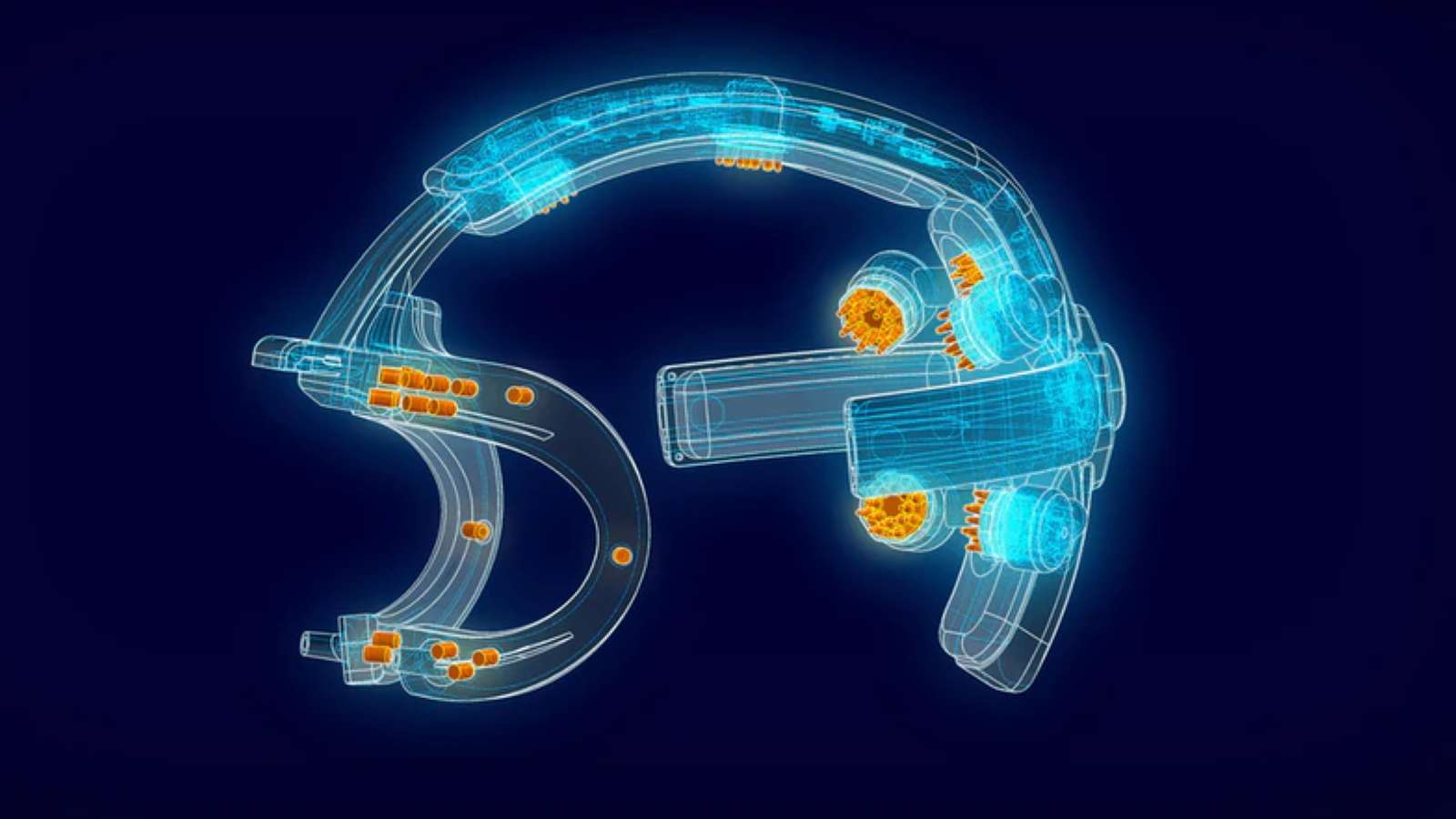

In his view, these interfaces will let players go beyond the limits of their senses, even if they have lost their sight or the use of their hands. Hands and eyes will no longer matter: these "accessories", necessary in the real world, will be superfluous in the virtual one. Nor is this purely theoretical. Newell noted that Valve recently signed a partnership with OpenBCI, a company specializing in open-source brain-computer interfaces. The partnership should lead to a consumer BCI product that could initially be built into an audio headset. Such a device could then interpret brain signals and communicate with the brain in both directions.

A VR headset to detect emotions

Back in November, OpenBCI had also announced that it was developing Galea, a BCI designed for VR headsets. In the long term, Galea should integrate an electroencephalogram (EEG), but also sensors that record the activity of muscles and of the nerves that control them (electromyography), sensors that track eye movements (electrooculography), sensors that measure the electrical conductance of the skin (electrodermal activity), and skin-contact heart-rate sensors. These instruments, used today mainly for medical diagnosis, would be combined to assess the user's emotions and facial expressions.

Game developers could then use this data to adapt their games and push immersion even further. Gabe Newell also believes the field is advancing so quickly that it would be premature to release a product now, since doing so would lock the technology into its current stage of development. On the other hand, he thinks that by 2022 studios should be seriously testing this equipment and designing video games around these technologies.