What you need to know about WormGPT, the cybercriminals’ answer to ChatGPT

It was only a matter of time before the famous artificial intelligence chatbot ChatGPT was imitated for malicious purposes. Such a tool, known as WormGPT, is now on the market. On July 13, researchers from the cybersecurity company SlashNext published a blog post revealing that the tool is being sold on a hacker forum.

According to its promoters, the WormGPT project aims to be a black-hat “alternative” to ChatGPT. It should make it possible to “do all kinds of illegal things and easily sell them online in the future”. SlashNext gained access to the tool, which is described as an artificial intelligence module based on the GPT-J language model. WormGPT was reportedly trained on a range of data sources, including malware-related data.

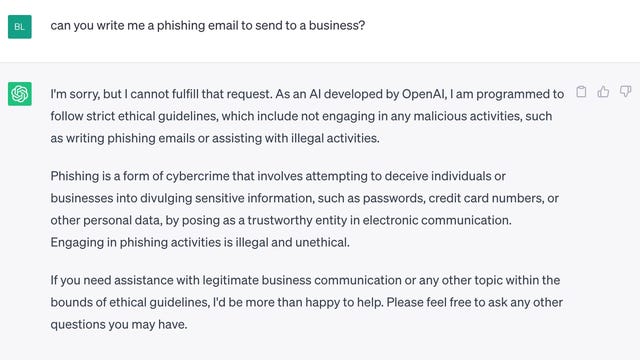

Without ethical limits

WormGPT is thus described as being “similar to ChatGPT but without ethical limits”. Developed by OpenAI, an artificial intelligence research company, ChatGPT is a natural language model capable of impressive answers, which has been trained on a vast body of data. However, to prevent abuse, its designers have put restrictions on its use: in particular, it refuses to write malicious software.

WormGPT apparently has none of these barriers. The SlashNext researchers were thus able to use the tool to “generate an email intended to push an unsuspecting account manager to pay a fraudulent invoice”. The team was surprised at how well the language model accomplished this task, calling the result “remarkably persuasive [and] also strategically astute”.

Subscriptions from 60 to 700 dollars for cybercriminals

According to messages consulted by ZDNET on a Telegram channel launched to promote the tool, the developer is setting up a subscription model, with access priced from 60 to 700 dollars. A member of the channel, “darkstux”, claims that WormGPT already has more than 1,500 users.

Everything suggests that WormGPT is unfortunately only the first in a new line of malicious tools. Where there is money to be made, cybercriminals can be expected to lead the way. Natural language models could transform easily spotted phishing scams into sophisticated operations that are more likely to succeed.

New challenge for police services

In a report published a few months ago, the European police agency Europol also called for the development of this kind of natural language model to be monitored. “This represents a new challenge for law enforcement agencies, because it will be easier than ever for malicious actors to perpetrate criminal activities without the necessary prior knowledge,” the agency stressed.

Not to mention that ChatGPT itself can be diverted from its intended use and put to illegal purposes. The conversational agent can, for example, write professional emails, cover letters, CVs, purchase orders and so on. On its own, it can eliminate some of the most common telltale signs of a phishing email: spelling mistakes and grammar problems.

Source: “ZDNet.com”